A little-known chip war.

On July 14, 2015, at 19:49 Beijing time, a spacecraft flew past Pluto, once counted among the nine major planets, and took a set of "identity photos" of it. The signal it later sent back traveled roughly 5 billion kilometers before finally reaching NASA. After processing, the images were published on the internet, allowing billions of ordinary people to see the true face of this mysterious world.

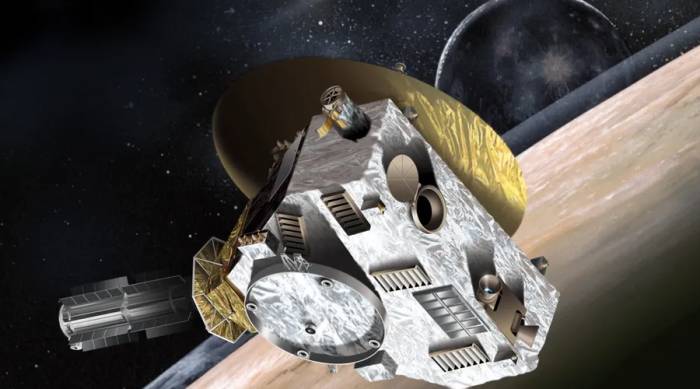

This spacecraft is New Horizons, which travels at about 58,000 kilometers per hour (about 16 kilometers per second). At launch, it was the fastest spacecraft humans had ever sent from Earth. From its launch in 2006 to its flyby of Pluto in 2015, the trip took more than 9 years. After a long and lonely journey, it finally lifted a corner of the veil over the vast universe.

What drives New Horizons is not a cutting-edge high-performance chip but an ordinary MIPS R3000 processor. This processor was first used in workstations and servers in the late 1980s, and its most famous use is in the PlayStation game console, which was released in 1994 and sold more than 100 million units. The console was not officially discontinued until 2006, the year New Horizons was launched.

When New Horizons made its closest approach to Pluto, the PS1 built around the same chip was gathering dust in a corner of the closet. The PS4, already on the market by then, had thousands of times the performance of the R3000 and could easily drive images that once took dozens of high-performance computers to render. The game console born in the last century had witnessed a computing revolution.

Behind this growth in computing power lie several major processor architectures that survive to this day, as well as the rise and fall of the semiconductor industries of the United States and Japan. Behind the game console is a chip war in miniature.

The legend of MOS 6502 and the rise of Japan

In 1973, 35-year-old Chuck Peddle joined Motorola, where he was responsible for the research, development, and sales of the famous 6800 processor. When the 6800 was officially launched, it was priced as high as 360 US dollars. Peddle believed that this price was beyond what ordinary families could afford, and he came up with the idea of simplifying the 6800's architecture to launch a low-priced chip costing only 50 US dollars.

But his attempt was quickly stopped by Motorola's top management. Soon after the 6800 was launched, Peddle left Motorola with his early chip design plan and joined the competitor MOS Technology (MOS). There, Peddle and his colleagues completed the simplified version of the 6800, the 6501 microprocessor. Because it was pin-compatible, it could even be plugged directly into a 6800 circuit board, which led Motorola to take MOS to court.

The result, of course, was that MOS lost and had to adjust the pin configuration of the 6501. In the second half of 1975, the 6502 microprocessor was officially launched, priced at 25 US dollars, only one-sixth of Motorola's 6800 and Intel's 8080.

The low price aroused doubts. The US "Electronic Engineering Times" published an interview with Peddle, titled "Do We Need a 20-Dollar Microprocessor?" The report opened with a series of questions: Is the 25-dollar CPU chip from MOS a waste of money for the market? If so, will low profits limit the company's ability to provide software and services?

However, these doubts were ultimately shattered by the consumer market. When manufacturers saw a processor priced at $25 with performance comparable to Motorola's and Intel's, they quickly opened their wallets and placed orders with MOS. Apple I, Apple II, Commodore PET, Acorn Atom, BBC Micro... Personal computers based on the MOS 6502 poured into the market, setting off the wave of computer popularization that began in the late 1970s.

Among the early well-known applications of the MOS 6502 was the Atari VCS/2600 gaming console, released by Atari in 1977. At that time, personal computers usually cost more than $1,000, so a processor costing tens of dollars was not much of an obstacle. For a gaming console planned to sell at $199, however, even a $25 6502 processor was too expensive.

Atari hoped that Peddle could supply two chips for their gaming console, namely the 6502 and the I/O chip (responsible for signal input and output), with a purchase price of $12. In the end, MOS signed this order for a simple reason: after calculation, the material production cost of mass-produced chips was only $4. Although the profit per chip was less than before, the scale effect would bring higher profits.

The CPU that eventually went into the Atari 2600 was the 6507, a minor modification of the 6502. It replaced the 6502's 40-pin package with a 28-pin package, which cut costs considerably. However, this also limited what the console could put on screen. An 8-bit CPU of this kind can usually address 64K of memory, but with fewer address pins the MOS 6507 can address only 8K, which in practice left it little better off than a 4-bit CPU.
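The gap between 64K and 8K follows from how many address lines the package exposes. A minimal sketch of that arithmetic, assuming 16 address pins on the 6502 and 13 on the 6507 (pin counts the text above does not state), with `addressable_bytes` as a hypothetical helper name:

```python
def addressable_bytes(address_lines: int) -> int:
    """Return how many distinct byte addresses a CPU can reach
    when it exposes the given number of address lines."""
    return 2 ** address_lines


# Assumed pin counts (not given in the article itself).
ADDRESS_LINES = {"6502": 16, "6507": 13}

for chip, lines in ADDRESS_LINES.items():
    size = addressable_bytes(lines)
    print(f"{chip}: {lines} address lines -> {size} bytes ({size // 1024}K)")
```

Each address pin removed halves the reachable memory, so dropping three pins divides the address space by eight.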

The trade-off between chip performance and price confronted manufacturers before personal computers were fully popularized: cut costs to achieve economies of scale, or keep prices high to capture profits?

Whatever others chose, Atari and MOS achieved a win-win. By 1982, the Atari 2600 had sold a total of 10 million units. In that year, Apple sold only 279,000 Apple II computers, and IBM sold only 240,000 personal computers. It can be said that without the heavily discounted MOS 6502, there would have been no console market of tens of millions of units.

The bad news is that the cold winter came quickly. Third parties began producing Atari console games on their own, the quality was extremely poor, and even Atari itself joined in, eventually triggering the Atari crash of 1983. Consoles and games sat ignored in stores, a large number of game companies went bankrupt, and Atari itself was not spared.

Tellingly, by this time the balance of the world's semiconductor industry had quietly tilted. On the surface, companies such as Intel, Motorola, and Texas Instruments were basking in glory thanks to their first-mover advantage. But in the late 1970s Japan had developed DRAM through a state-led effort, establishing the VLSI joint research and development body, and in the early 1980s it quickly seized the world's semiconductor market.

The onslaught of Japanese firms on the U.S. semiconductor industry forced hard choices about which businesses to protect and which to give up, and left even the man who proposed Moore's Law scratching his head. In fact, the prosperity of a country's semiconductor industry often drives the development of its consumer electronics, and consumer electronics in turn feed the chip industry, forming a virtuous cycle. When either side falters, the cycle turns vicious. When the Atari crash occurred, every company supplying chips for the Atari VCS entered a long winter.

By contrast, the Japanese semiconductor industry became a sunrise industry. Beyond the five giants NEC, Toshiba, Hitachi, Fujitsu, and Mitsubishi, companies such as Sharp, Sony, and Panasonic also rose quickly to prominence on the strength of vertically integrated chip production.

At that time, having seen how well Atari was selling, Nintendo's president, Hiroshi Yamauchi, asked the development department to design a home gaming console with performance so strong that no competitor could catch up in the short term, and with a cost squeezed as low as possible, preferably within 10,000 yen.

It should be noted that 1 US dollar was worth about 250 yen in the early 1980s, so this meant designing a gaming console priced at 40 US dollars. To put that in perspective, in 1982 the production cost alone of the Atari 2600 was around 40 US dollars, and in its early years the cost had been even higher.
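The conversion above is simple enough to check by hand, but a small sketch makes it easy to apply the same rate to the other yen prices quoted in this article. The rate of 250 yen per dollar is the approximate figure from the text, and `yen_to_usd` is a hypothetical helper name:

```python
YEN_PER_USD = 250  # approximate early-1980s rate quoted in the text


def yen_to_usd(yen: float, rate: float = YEN_PER_USD) -> float:
    """Convert a yen amount to US dollars at a fixed exchange rate."""
    return yen / rate


# Yen prices mentioned in the article.
prices_yen = {
    "Yamauchi's target console price": 10_000,
    "Game & Watch": 5_800,
    "final Famicom price": 15_000,
}

for label, yen in prices_yen.items():
    print(f"{label}: {yen} yen is about ${yen_to_usd(yen):.2f}")
```

The result is only as good as the single assumed rate; actual exchange rates moved throughout the period.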

Why was Nintendo so confident? Its confidence rested on a Japanese semiconductor industry at its peak. Nintendo had already joined hands with Sharp in 1980, using Sharp's surplus calculator chips and LCD screens to create Game & Watch, a device that worked as an electronic watch and played simple games. Priced at 5,800 yen, it became a hit.

Following the same approach, the development department began looking for Japanese companies that could supply chips for the console. Because of the tight cost control, however, the five giants turned them down. Even sales in the millions meant only a small profit for such large companies, hardly worth mentioning. They would rather supply more chips for large machines.

In the end, the order was taken by the little-known Ricoh. Ricoh recommended the MOS 6502 to Nintendo, in the form of the customized Ricoh 2A03. It pointed out that the die area of the 6502 was 1/4 that of the then-popular Z80, so the remaining 3/4 could be used for other circuits. In this way, the chip price came within an acceptable range.

It is worth mentioning that by this time MOS had been acquired by Commodore. To avoid patent fees, Nintendo and Ricoh took a "detour" and did not apply for a license. Instead, they removed the patented decimal correction circuitry, retained the rest of the 6502, and drove the cost as low as possible.

Nintendo also took a gamble and signed a contract with Ricoh for 3 million chips. As for the original plan of 10,000 yen, it was not possible due to the cost, and the final price was set at 15,000 yen.

Nintendo's Family Computer (FC) was officially launched in Japan in 1983. Slow sales at the beginning could not dim its brilliance. With a steady stream of high-quality games, this console built around the MOS 6502 set off a home console revolution even bigger than that of the Atari 2600. From New York to Tokyo, almost every middle-class family was considering buying an FC. A chip from 1975 swept the world in an unexpected way 10 years later.

The Debut of Z80 and 68000

Two processors contemporary with the MOS 6502 have already been mentioned: the Z80 launched by Zilog, and the 6800 released by Motorola, which was later followed by the 68000.

They were hardly less popular at the time, but they were not as successful as the MOS 6502 in gaming consoles. The reason was not just chip price, but also the overt and covert competition among console manufacturers.

Let's talk about the Z80 first. In 1974, Federico Faggin, a developer of the Intel 8080 processor, left the company and founded Zilog. The first product he developed with his 11 employees was the 8-bit Z80 processor, which was not only compatible with Intel 8080 code but could also run the CP/M operating system written for the 8080, inheriting the well-developed ecosystem of its predecessor.

In gaming, the Z80 also shone brightly. Namco's arcade game Pac-Man used the Zilog Z80 as the main processor on its board. For an 8-bit processor, the Z80 had a remarkably long life. Even later arcade systems such as Capcom's CPS1 and CPS2 and SNK's Neo Geo still used the Z80 as an auxiliary or sound processor. This was also the main reason Nintendo once considered the Z80 for the Famicom (FC): sharing a processor with arcade machines would make it easier to port arcade games and attract arcade players.

In the early 1980s, the Z80 officially entered the home console market. The ColecoVision, marketed as "bringing arcade games to the home" and equipped with a 3.58MHz Zilog Z80A, was once the most popular console on the market after Atari. In 1983, Sega launched the SG-1000, which used an equivalent of the Z80A, the NEC780C (produced by NEC itself based on the Zilog Z80A). However, their market performance fell far short of the FC's in the same period. There were many reasons, the most important being that Nintendo used a royalty system to attract a large number of Japanese game makers to develop for the FC. Nintendo drew on its own arcade development experience and on high-quality third-party games to make a name for itself, while the other two seemed to be stuck in the Atari era and took two or three years to catch up.

Now let's talk about Motorola's 68000 processor. Motorola started the MACSS (Motorola Advanced Computer System on Silicon) project in 1976, and in 1979 the Motorola 68000 was successfully developed. It was manufactured using 3.5-micron HMOS technology (high-performance N-channel metal oxide semiconductor, which preceded CMOS), integrated 68,000 transistors, and supported up to 16MB of memory. Although the 68000 was still classed as a 16-bit processor, it was 32-bit internally, with 32-bit registers and instructions, so it could run 32-bit software directly. This gave it a significant performance advantage over contemporary 16-bit processors.
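The "16-bit outside, 32-bit inside" description can be made concrete with two small calculations. This is a rough sketch under assumptions the text does not spell out: a 24-bit address bus (which matches the 16MB figure) and a 16-bit external data bus. The helper names are hypothetical.

```python
import math

ADDRESS_BITS = 24   # assumed address bus width, consistent with 16MB
DATA_BUS_BITS = 16  # assumed external data bus width
REGISTER_BITS = 32  # internal register width


def addressable_mib(address_bits: int) -> int:
    """Addressable memory in MiB for a byte-addressed bus of the given width."""
    return 2 ** address_bits // (1024 * 1024)


def bus_transfers(operand_bits: int, data_bus_bits: int) -> int:
    """Number of bus transfers needed to move one operand over the data bus."""
    return math.ceil(operand_bits / data_bus_bits)


print("addressable memory (MiB):", addressable_mib(ADDRESS_BITS))
print("transfers per 32-bit operand:", bus_transfers(REGISTER_BITS, DATA_BUS_BITS))
```

Under these assumptions, a full 32-bit value takes two trips over the external bus, which is why the chip is usually counted as 16-bit despite its 32-bit programming model.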

However, the 68000 was expensive, and it took nearly ten years to officially enter the gaming market. The first home console equipped with the 68000 came, once again, from Sega, which launched the Mega Drive with a Motorola 68000 (7.67MHz), making it the first truly 16-bit console. The earlier Z80 was not wasted either: it became the Mega Drive's audio processing chip. At the time, this configuration was unprecedentedly powerful.

However, Nintendo's later Super Famicom (SFC) did not fall behind in performance. By this time, the MOS 6502 had spawned several variants, and the SFC used the Ricoh-developed 5A22. It derived from WDC's 65C816, a 16-bit processor based on the 6502, and ran at a clock frequency of 3.58MHz. Compared with the Mega Drive, the SFC also supported more colors.

It can be seen that the game consoles of the 1980s provided a stage for the various processors that had emerged at the end of the 1970s. A single flower does not make spring, but a hundred flowers in full bloom bring spring to the garden. The best among them have lasted to this day and are regarded as classics.

What deserves more attention is that the popular consoles of the 1980s, such as the FC, SFC, and MD, did not directly use processors produced by American companies, but customized versions made by Japanese semiconductor companies. Moreover, Japanese firms also supplied a large share of the graphics and audio processing chips.

Tens of millions of game consoles brought continuous profits to Japanese semiconductor makers, a miniature of how Japan's consumer electronics and semiconductors swept the field and defeated their American rivals.

PowerPC and MIPS, like meteors passing by

As the saying goes, too much rich food brings illness, and too much good fortune brings disaster. While Japanese companies were making a fortune from game consoles, they overlooked their own limitations, and hidden dangers surfaced one after another.

Let's start with Sega. The MD achieved unexpected success in the North American market, selling more than 40 million units. Losing in the east but winning in the west strengthened Sega's determination to keep developing home consoles. Moreover, because the 68000 was used on many arcade boards, it was easier to port Sega's rich lineup of arcade games, which finally led Sega to a decision: keep using arcade chips.

In terms of specific configuration, the Sega Saturn used two Hitachi SH2 processors, the same chip as on Capcom's CPS3 board, running at 28MHz. Through distributed, parallel processing, the Saturn had very strong data processing ability. It also used two VDP (video display processor) chips to handle screen display. The VDP1 chip was responsible for 2D sprites, which could be enlarged, reduced, deformed, and rotated, and it could also draw quadrilaterals. The VDP2 chip was responsible for backgrounds, with 4 ordinary scalable scrolling layers and 2 freely rotating scrolling layers, of which at most 5 could be displayed on screen at once.

Two CPUs plus two graphics processors: this combination looks extravagant even today. It gave developers a headache, and it made porting and development difficult. The console emphasized 2D performance and soon lost out to the onslaught of 3D games on Sony's PS1. By this time, Japanese semiconductor companies were in decline, and Sega reluctantly began to seek help from American companies, at one point even intending to have Nvidia customize its graphics and audio processing parts.

Sega finally settled on the Dreamcast as its next-generation console, and its hardware configuration was much more reasonable than the previous generation's. The CPU was Hitachi's SH4 chip, clocked at 200MHz with a 128-bit bus (running 32-bit code). The graphics chip was the PowerVR2, jointly developed by NEC and the British company VideoLogic, which could calculate more than 3 million polygons per second. Unfortunately, the Dreamcast still suffered a heavy defeat, and under the onslaught of the PS2's powerful game lineup, Sega finally left the console stage.

Sony's PlayStation, which rose in 1994, took a completely different path from Sega. It chose MIPS as its CPU architecture and was equipped with a MIPS R3000A processor, the same processor mentioned at the start of this article in connection with the New Horizons spacecraft. With a dedicated 3D graphics chip added, it offered more powerful 3D performance than most consoles.

This was the first time MIPS appeared in a gaming console. Subsequently, several Japanese manufacturers' consoles were equipped with custom MIPS processors, including the N64's R4300 and the PS2's R5900. Through gaming consoles, MIPS became one of the major mainstream architectures of the time.

The rise of MIPS was mainly due to the strong support of American computer manufacturers. However, in the turbulent late 1990s, SGI decided to abandon the MIPS architecture and turn to the IA-64 architecture, completely divesting its MIPS business. The Japanese manufacturers who had cooperated closely with MIPS were caught off guard. The cooperation had just started to show promise, and they had hoped to use the MIPS architecture to compete with Wintel. The sudden abandonment left MIPS-based consoles without a future.

By this time, the Japanese semiconductor industry was left with only a group of weak and ailing companies. Japanese console manufacturers began to seek help directly from American manufacturers, hoping for technical support. The dominant Intel was out of the question: with its global PC market share, it looked down on the small console market. The struggling IBM, however, extended an olive branch.

In 1998, IBM launched the world's first processor with copper interconnects, the PowerPC 750. Thanks to copper interconnect technology, processing performance increased by nearly one-third. Nintendo happened to come knocking at this point, so IBM customized the Gekko processor, based on the PowerPC 750, for Nintendo's next-generation console, the NGC. The processor was manufactured with copper interconnects on a 0.18-micron process and ran at 485MHz, and its performance was much stronger than that of the PS2, which continued to use the MIPS architecture.

Microsoft, the giant of the American market, also officially entered the stage at this time. Compared with the Japanese manufacturers, Microsoft's entry was much simpler and more straightforward. Others, limited by cost and power consumption, did not dare use x86 processors, but Microsoft boldly purchased millions of Pentium III chips as the processor for the first-generation Xbox. Its performance went a step beyond the NGC's, making it the best-performing gaming console on the market at the time.

After the PS2, Sony followed in Nintendo's footsteps and cooperated with IBM to develop the Cell processor. Sony and IBM envisioned a general-purpose processor architecture like Intel's x86, one that could be used not only in the next generation of consoles but also in digital home appliances and servers. Unfortunately, this processor, which cost billions of dollars, ultimately proved to be badly hobbled, and the confident Sony repeated Sega's mistake.

From MIPS to PowerPC, Japanese manufacturers gradually lost their say in processors and followed the American semiconductor companies step by step. What made some in Japan even more regretful was that many MIPS processors had been customized and produced by Japanese semiconductor manufacturers. After PowerPC, however, chip manufacturing was completely out of Japan's hands. Want high-performance chips? Then first pay a fee much higher than before.

Rise, and then helplessly fall. After the decline of the Japanese semiconductor industry, the once proud Japanese gaming consoles could only add to or subtract from chips designed and led by the United States. Their competitiveness was limited to software rather than hardware, and they were no longer as brilliant as in the 1980s.

Epilogue

Nowadays, Sony's PS5 and Microsoft's Xbox Series X/S both feature an x86 processor from AMD, which integrates the functions of CPU and GPU. As for Nintendo's Switch, it has chosen the Tegra X1 processor produced by Nvidia, with the CPU coming from Arm and the GPU being independently developed by Nvidia.

It is difficult to find the presence of Japanese manufacturers in other chips or components of these three consoles. Even the JDI screen used in the initial version of the Switch was quietly replaced by Innolux's LCD and Samsung's OLED. Setting aside Microsoft, the roles of Sony and Nintendo have quietly changed over the past forty years, shifting from defining hardware to defining software.

Of course, it's not just gaming consoles. In the Japanese market, a large share of the chips and components in electronic products since the turn of the millennium no longer come from Japanese companies, but have quietly been replaced by products designed and manufactured in other countries. The Japanese semiconductor industry lost the chip war, and the cost has been not only declining output and unemployment but also damage to the consumer electronics market.

Now that the Japanese semiconductor industry is clamoring to develop 2nm technology, how can it persuade the market and manufacturers to launch products equipped with Japanese chips again?
